Changelog

Follow up on the latest improvements and updates.

2.0.0

Major Features

MCP (Model Context Protocol) Support - The Future is Here!

Wingman Ultra now supports MCP servers, which means you can connect external tools and services to your Wingmen. Think of it as a plugin system that works with any MCP-compatible tool. The really cool thing is that MCP servers can either run locally on your machine and interact with programs you might already be using (like Notion, Figma etc.) or they can be hosted remotely (if they’re stateless and don’t need local file access). It’s basically like a Skill but on a different server, meaning it can be updated independently from Wingman AI and it’s super easy to connect to. MCP is like the official protocol for Skills that didn’t exist yet when we introduced Skills to Wingman AI.

What does this mean for you?

- Access to more data: Connect to web search, game databases, and other programs or services

- Community-driven: Developers can create MCP servers in any language, and you can use them in Wingman AI. There are already thousands of MCP servers out there!

Built-in remote MCP servers, hosted by us for you:

- Date & Time Utilities: Current date and time retrieval and time zone conversions (free for everyone)

- StarHead: Star Citizen game data (ships, locations, mechanics) (included in Ultra but still enabled for everyone in our Computer example Wingman)

- Web Search & Content Extraction: Brave and Tavily search (included in Ultra)

- No Man's Sky Game Data: Game information and wiki data (included in Ultra)

- Perplexity AI Search: AI-powered search (bring your own API key)

- VerseTime: This one is new and was created by popular demand from @ManteMcFly. It’s basically like “Date & Time Utilities” but for Star Citizen locations so that you can plan your daylight/night time routes more easily.

We’re planning to add more MCP servers in the future and who knows… maybe we’ll have a “discovery store” at some point allowing you to discover new MCP servers more easily.

Progressive Tool Disclosure - Smarter and Faster

We've completely reworked how your Wingmen discover and activate Skills and MCP servers. Their descriptions and metadata no longer pollute the conversation context as soon as they are enabled. Instead, the Wingmen get a very slick discovery tool allowing them to find capabilities (which are either Skills or MCP servers - the LLM doesn’t care) ad-hoc. Only when discovered and activated do Skills and MCP servers add information to the context - saving tokens and precious response time. We tested this a lot and it works way better, especially with smaller (local) models.

Inworld TTS (experimental)

You wanted more voices and now you get them!

- Included in Wingman Ultra (for now!)

- High-quality voices with inline emotional expressions (anger, joy, sadness, coughing etc.)

- Very low latency around 200ms, making it the fastest provider today

- Way more affordable than ElevenLabs

- If you bring your own API key (BYOK), you can also use voice cloning

Word of truth: While Inworld is 100% our new provider of choice for English TTS output, it unfortunately isn’t that great in other languages yet, especially in German. They only have 2 voices so far and these aren’t amazing. So if your Wingman responds in German, ignore it for now!

We have to monitor the usage and see if it “fits” into our Ultra plan. If it doesn’t work out, we might have to remove it later or add a new pricing tier for it - just so you know.

CUDA bundling and auto-detection

CUDA is finally bundled with our release and FasterWhisper now automatically detects and uses it if you have a compatible NVIDIA GPU. This is huge because we know many of you struggled with this or just didn’t bother (making everything slower than it needed to be) - now it’s solved! No need to install outdated drivers or extract and copy .dll files anymore.

UI improvements

We’ll never stop improving our client and apart from various minor improvements, this time we have completely redesigned all of our AI and TTS provider selectors. We now show you what the providers actually do and also which capabilities their models have. We also added search, filters and fancy recommendation badges to the mix, so picking the best provider and model has never been easier.

More Features

- Improved AI prompts: We’ve completely overhauled our system prompt and also the backstories of our Wingmen. There is also a cool new button in our UI that turns your current backstory prompt - no matter how good or bad it is - into our new recommended format and makes it use the latest prompt engineering techniques. You can now literally start a backstory with one sentence and turn it into a magic gigaprompt with a single click of a button. We highly recommend using it for your migrated Wingmen - it will make them better!

- New models and better defaults: We’ve added gpt-5-mini for our Ultra subscribers and updated many default models across providers (e.g. gemini-flash-latest for Google). We’ve also added xAI (Grok) as a BYOK provider, including all of their models. In turn, we removed the obsolete mistral and llama models and also gpt-4o (outdated, slow and expensive) from our subscription plans.

- Groq (not xAI/Grok with a “k”) can now be used as STT (transcription) provider, which is really fast (400-500ms) and cheap for a cloud service. We recommend testing it, especially if you don’t have an NVIDIA RTX GPU or are running Wingman AI on a slow system you don’t want to run local STT on.

- Mistral models are now loaded dynamically, so you always get the latest available models

- Wingmen can now be cloned into any context in the UI

- You can now bind a joystick key as “shut up key” (cancelling the current TTS output)

- Enhanced TTS prompts: Added tts_prompt parameter for OpenAI-compatible TTS providers like coquiTTS, allowing them to use Inworld-style inline emotions (if supported)

- Audio library moved: The sound library is no longer in the versioned working directory, meaning it can easily be migrated and you no longer have to copy it manually. If you had sounds in 1.8, they’ll just be available in 2.0.

- Documentation: Added detailed developer guide for creating custom Skills (see skills/README.md) and added AI-optimized guidelines for AI agents working in the codebase (.github/copilot-instructions.md)

Bugfixes

- Fixed Wingmen thinking they are called "Wingman" unless stated otherwise

- Fixed Voice Activation vs. remote whispercpp conflicts

- Fixed streaming TTS with radio sounds for OpenAI-compatible TTS providers

- Fixed migrations not respecting renamed or logically deleted Wingmen (once again)

- Improved instant command detection to exact match (prevents false positives like "on"/"off")

- Fixed various UI glitches and issues with AudioLibrary and previewing sounds

- Local LLMs can now receive an API key if needed

- OpenAI-compatible TTS now receives the output_streaming parameter and can load voices dynamically (if supported).

- Very long Terminal messages now wrap the line correctly and no longer break the UI layout

- “Stop playback” now also shows the “shut up key” binding

- Prevent “request too long” client exception after excessive use

- Wingman names can no longer consist of only whitespace, which used to break everything

- Fixed Wingmen with same name prefix all being highlighted as “active” when configuring
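
To illustrate the instant command fix above: matching an activation phrase as a substring of the transcript fires on ordinary sentences that merely contain "on" or "off", while an exact match does not. The following is a minimal sketch of exact matching and not the actual Wingman AI implementation; the command names, the phrases and the normalization rules are assumptions made for this example.

```python
from typing import Dict, List, Optional


def normalize(phrase: str) -> str:
    """Lowercase, trim and drop trailing punctuation so 'On.' equals 'on'."""
    return phrase.strip().lower().rstrip(".!?")


def find_instant_command(transcript: str, commands: Dict[str, List[str]]) -> Optional[str]:
    """Return the command whose activation phrase equals the whole transcript."""
    spoken = normalize(transcript)
    for name, phrases in commands.items():
        if any(normalize(p) == spoken for p in phrases):
            return name
    return None


# Hypothetical commands and phrases, for illustration only.
COMMANDS = {"LightsOn": ["on"], "LightsOff": ["off"]}

print(find_instant_command("On.", COMMANDS))                # LightsOn
print(find_instant_command("What is going on?", COMMANDS))  # None
```

With substring matching, the second call would have triggered LightsOn; with exact matching, the sentence is passed on to the LLM instead.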

Breaking Changes & Migration

- validate() is now only called when the Skill is activated by the LLM, unless you set auto_activate: true in the default config

- custom property values should no longer be cached (in the constructor, validate etc.). Read them in-place when you need them!

- Custom skills are now identified as such and copied to /custom_skills in your %APPDATA% directory, outside of the versioned structure.

Skill format changes

If you have custom Skills, you need to update default_config.yaml:

- category (string) is now tags (list of strings)

- Simplified examples to only contain question strings (no more answers)

- There are a couple of new properties for your default_config: platforms, discovery_keywords, discoverable_by_default, display_name etc.

- Removed old migrations from previous versions. If you're upgrading from 1.7.0 or earlier, start fresh and manually migrate some settings!
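
The key changes above can be sketched as a small conversion function. This is only an illustration under assumptions: the real file is YAML, while the sketch works on an already parsed plain dict, and the old shape of an examples entry (a question/answer pair) is a guess, as is the sample skill itself. The new properties are not filled in because their expected values are not described here.

```python
from copy import deepcopy


def migrate_skill_config(old: dict) -> dict:
    """Convert an old-style skill config dict to the new key layout."""
    new = deepcopy(old)

    # category (string) becomes tags (list of strings)
    if "category" in new:
        category = new.pop("category")
        new.setdefault("tags", [category] if category else [])

    # examples keep only the question part; answers are dropped
    # (assumption: old entries looked like {"question": ..., "answer": ...})
    new["examples"] = [
        ex["question"] if isinstance(ex, dict) and "question" in ex else ex
        for ex in new.get("examples", [])
    ]
    return new


# Hypothetical old config, for illustration only.
old_config = {
    "name": "MySkill",
    "category": "utility",
    "examples": [
        {"question": {"en": "What time is it?"}, "answer": {"en": "It is noon."}},
    ],
}
print(migrate_skill_config(old_config))
```

Entries that already follow the new format pass through unchanged, so running the function twice is harmless.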

Known Issues

- Elevenlabs continues to have strict rate limits. If you fetch voices and models too quickly, requests may fail. Wait a few seconds between requests and use the wrench icon to retry if needed.

- You still have to log in every 24 hours. Sorry, we really tried. Azure things…

1.8.1

Features

- Remote clients (experimental): You can finally connect to your Wingman AI host from other devices on your network. Use your iPad or laptop as a second screen! While you cannot record your voice using external clients, the TTS output will be played on both your host and external clients. You can also send text from external clients to the host and most user interface interactions will work on external clients, too. Remember that Wingman AI is still running on your PC, so changes are applied there. To connect, simply start Wingman AI on your main PC and then navigate to http://<host-ip>:5173 in the browser on your external device(s). You might have to allow incoming connections on this port in the firewall on the host. You can find out the local host IP by typing ipconfig in a shell or by inspecting your network adapter in Windows.

- Wingman Pro: We improved the performance of our existing regions and added a new Asia region. You have to update to 1.8.0 to get the full benefits, especially if you're in the US or in Asia.

- We added a new Shut up key which defaults to Shift+y (can be rebound in Settings) and cancels any current TTS output via shortcut

- Improved Skills UI that tells you better what Skills actually do. Our in-client tutorial videos are now played in a modal dialog to de-clutter the interface

- We now support OpenAI's new TTS API with our new “OpenAI-compatible TTS” provider and you can use it to connect to local TTS systems like CoquiTTS (xtts2) or others. This is huge because now you can go "full local" for the first time ever and even have voice cloning. If you want to dive into local TTS, we highly recommend @teddybear082's Wingman AI version of CoquiTTS. He has Wingman-specific instructions in this repository and can help you to set it up.

- Our Google provider now supports function calling, so that you can use the gemini-models to their full capabilities. They’re really fast and have quite the “personality”, so try them out! You need an API key but the free tiers are very generous.

- Added Hume AI as a new TTS provider. It’s a service similar to Elevenlabs but more affordable.

- Added speed and model parameters and the ballad voice for OpenAI TTS

- updated Perplexity models

- We added a {SKIP-TTS} phrase. If you prompt the LLM to add this string to its reply (or write a Skill that does it), the reply will appear in the Wingman UI but not be sent to the TTS provider. This is useful for “silent replies”.

- Skills now have a new on_play_to_user hook that is called just before we send the text to the TTS provider. You can modify the text here to remove smileys from the response, add the {SKIP-TTS} phrase etc.

- You can set default hotwords for FasterWhisper using Settings > Edit default configuration and these are now separate from the Wingman-specific hotwords. In other words: Wingmen (and Skills like UEXCorp) can now add additional hotwords on top of the default ones properly.

- Added new custom property type audio_device for Skills that will render our audio device picker in the UI

- We are now tracking some anonymous usage data in our client to understand better which features you're (not) using. We do not log your backstories or any user-generated content, it's things like "changed LLM provider to x" or "opened Sound Effects Library". We cannot identify you with this data and we won't sell it. You can opt-out in the Settings view.
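
The {SKIP-TTS} phrase and the on_play_to_user hook from the list above could work together roughly as sketched below. This is a standalone illustration and not the real Skill base class: the hook signature, the prepare_reply helper, the emoji ranges and the choice to strip the marker from the displayed text are all assumptions.

```python
import re
from typing import Optional, Tuple

SKIP_TTS = "{SKIP-TTS}"

# Rough emoji ranges; not exhaustive.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def on_play_to_user(text: str) -> str:
    """Hypothetical hook body: clean up the text right before it goes to TTS."""
    return EMOJI.sub("", text).strip()


def prepare_reply(reply: str) -> Tuple[str, Optional[str]]:
    """Return (text shown in the UI, text for TTS or None for a silent reply)."""
    if SKIP_TTS in reply:
        # Assumption: the marker itself is not shown to the user.
        return reply.replace(SKIP_TTS, "").strip(), None
    return reply, on_play_to_user(reply)


print(prepare_reply("Landing gear deployed. {SKIP-TTS}"))
print(prepare_reply("Welcome back, Commander \U0001F600"))
```

A reply carrying the marker is still displayed but yields no TTS text, while a normal reply is displayed unchanged and spoken without the smiley.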

New Skills

- Audio Device Changer: Lets you change the desired output device per Wingman. This is useful for VTubers who need each Wingman on a dedicated virtual sound device to display avatars etc.

Skill changes

- Vision AI: improved Skill prompt

- Timer: added silent mode

- American/Euro Truck Simulator: fixed location data

- UEXCorp: minor adjustments and optimizations

Bugfixes

- FasterWhisper no longer crashes when you remove a language set previously

- Fixed Elevenlabs provider not loading models (thanks @lugia19)

- Wingman Pro request errors are now sent to the client so that we can display them properly in the UI

- fixed missing translations in French and Spanish

- The initial Setup Wizard now asks for your NVIDIA RTX series and sets FasterWhisper’s computeType to float16 if it’s a 5000 card, preventing a crash

- Clicking on the LLM model dropdown and leaving without selecting a new one no longer crashes Wingman AI Core

- models are now (re)loaded properly after entering a secret

- fixed a bug happening during the migration of Wingmen from 1.7.0 which never modified the FasterWhisper configuration before

- fixed UEXCorp Skill importing false from sympy by mistake

- fixed WebSearch skill still providing category instead of the new tags: list[str] in default_config.yaml

- Tower is no longer reset and reinitialized every 30mins when the Azure login token is refreshed

Breaking changes & migration:

- Wingman AI Core now needs a client to be connected to it. We had issues before where Skills were requesting data using the Wingman Pro API before the user was authenticated, so Skills are now initialized after the user has logged in.

- Unfortunately, we have to reset your FasterWhisper hotwords during migration. So you'll have to enter them again. You can still access your old list of hotwords by looking at your old 1_7_0 configs in your APPDATA directory.

- Custom Skills have to be migrated manually as usual. This time we only changed the structure of the default_config.yaml to display Skills better in the UI: category: str was replaced by tags: list[str]. Added new properties display_name (may contain whitespaces) and author. Simplified examples to only contain questions now (so a list of de/en strings).

Known issues:

- Hume currently doesn't list custom voices which we already reported to them. You can still set one by editing the config manually. Let us know if you need help with that!

- Elevenlabs lowered their request limit significantly. If it fetches your voices and you then open the wrench to fetch the models too quickly, the second request might fail. The workaround is to wait a couple of seconds after the voices have been loaded and then click the wrench. You can toggle the wrench to send the request again. If it fails, the model field will just disappear.

1.7.0

Features

- You can now use joystick and mouse buttons as PTT activation keys. This is one of the most requested features ever and we were finally able to pull it off. While we can detect that you pressed a joystick button, we still can’t simulate pressing it.

- We added a new Initial Setup Wizard that will walk you through the most important settings and configuration parameters we always had to explain to everyone: Speaking to your Wingman in another language, running STT on your GPU with CUDA and selecting the nearest Wingman Pro region.

- We built an interactive help aka Tour Guide that you can start from the Terminal view or in a Wingman configuration. It will detect what’s on your screen and show important explanations and hints for several options in the UI. This replaces many of the text blocks we had in the “help column” before, making the UI much cleaner. The help column will now mostly be used to display short tutorial videos for the appropriate sections. We haven’t produced all of them yet but we can patch them in later without updating the Wingman AI client, so they’ll appear when they’re ready.

- Faster Whisper replaces whispercpp as our default STT provider. It’s significantly faster running on CPUs (the default) and equally fast on NVIDIA GPUs. FasterWhisper supports “hotwords” which you can use to force transcribing complicated names etc. correctly. If you define “ArcCorp” as a hotword, it will transcribe everything you say that is similar enough exactly like you typed it out. The names of your Wingmen are always added as hotwords which makes Voice Activation much more reliable now. FasterWhisper allows you to download official models from HuggingFace automatically and this is completely integrated into Wingman AI. If you select a new model from the list, it will be downloaded and can be used directly. You can still use whispercpp if you want to, but we removed the code to auto-start/close it, so you’ll have to run it on your own now. You can connect it in the Settings view. It is disabled by default now and will not show up in the list of available STT providers unless you enable it in the Settings (similar to XVASynth).

- The Terminal now shows better benchmark results per message. You can hover the processing time to learn how long each step of the processing took. This will make it much easier to find out where potential bottlenecks in your configuration are.

- Spanish and French were added as client languages. We obviously used Wingman AI to translate itself, so please tell us if the translations suck and we will change them.

- We removed the ‘Advanced’ tab in the Wingman configuration and now allow all users (including those in the free plan) to change all settings that were under Advanced before. This means you can now change STT, TTS and AI providers as you wish and use your own API keys without having to touch any configs manually. Editing Commands and Skills in the UI will remain a Pro-only feature.

- The system prompt, where your backstory is inserted as a variable, can now be overwritten per Wingman, similar to most other configuration parameters. It will appear next to the backstory in the UI.

- You can now define stop and resume actions for audio playback Commands, meaning you can start playing a sound file in one Command and then stop or resume the playback in another one.

- added a new option to Settings that displays incoming Wingman messages larger and fades out the background - we call it Zoom View. Some love it, others don't, so it's disabled by default.

- Wingman Pro models are now loaded via an API in our backend so that we can update them without having to update the Wingman AI client.

Skill changes

- StarHead has new features: Get trading information of specific shop - you can now ask if a commodity can be traded at a specific shop and what the price is. Get trading shop information of celestial object - you can now ask for shops trading a specific commodity and what the price is.

- UEXCorp was completely reworked - big thanks to @Lazarr for testing! Receiving item data or trade routes can now be toggled on/off individually. Better names/terms matching with dynamic AI suggestions. The AI now understands relations between different objects better. Automatic FasterWhisper hotword list export to your Wingman. This is huge and means your Wingman will know all the correct spellings for SC terms and will transcribe them much more reliably. Data is now kept up-to-date during a session automatically. Better profit calculation. Removed option to get additional lore information for ships and locations (for now).

- FileManager can now handle .zip files (extract & compress)

- Spotify is less likely to crash Wingman AI if it's not set up correctly. We'll also make a video explaining how to set it up soon.

- Most Skills got minor updates & bugfixes

New Skills

- Time and Date lets your Wingman know… just that. This is apparently useful in certain scenarios and was a requested feature by the community.

Bugfixes

- fixed a small bug with great effect that would let your voice input appear in the UI with a delay - only after the AI response was generated. This only happened in Focus mode (if a specific Wingman was selected in the Terminal view) and let people think that their STT provider was working too slowly.

- Wingman AI Core now logs more reliably, even when it crashes early. This means you have to start it manually from the command prompt less often to see what’s (not) happening.

- fixed OpenRouter suddenly throwing errors when you send tools to a model that doesn’t support them. We also display if the selected model can call tools or not in the UI now.

- fixed an issue when trying to stop audio playback

- fixed ‘Right Alt’ modifier key and some other extended Windows key codes not being recorded correctly

- Wingman AI Core is now running on port 49111 by default (which is widely unused) and the port can be changed using a command line parameter or by the Tauri client

- Wingman AI Core now also detects Skills that are only present in the AppData directory but not in the source directory

- resolved Wingman AI Core model naming convention warnings on start (coming from Pydantic)

- migrated to Tauri v2 which was a pain to do but is important for future updates and maintenance.

- Deleting a Wingman is now guarded by a text input prompt so that you can’t delete them by mistake, e.g. when editing Commands

- fixed special characters used in Commands crashing Wingman AI. They are now slugified properly. We also fixed the regex validating Wingman names leading to the same error.

- We tried everything to require you to log in less often. The current state is that you will always stay logged in as long as Wingman AI is running. If you exit Wingman AI, you are required to log in again after 24 hours of inactivity. This limitation is set by Microsoft/Azure and we cannot change it.

- We made “AI filler responses” a Pro feature because it will throw “You need a subscription” errors if Wingman Pro is selected as provider but you don’t have a Pro subscription.

- The text input now regains focus after it was disabled/enabled during TTS output. This means you can keep typing without having to click on the input again after each message.

- Various small UI fixes and improvements

1.6.2

Skill Changes

- ImageGeneration can now save to file and passes the file path to chained function calls

Bugfixes

- Closing Wingman AI client now properly terminates whispercpp and XVASynth processes (if it launched them)

- fixed config directories being recreated when the default config dir was changed (on Windows)

- fixed "white screen error" when changing the default Wingman in the active config using the client

- Wingman Pro: hide "Subscribe" buttons if you already have a subscription

- fixed some minor client issues

1.6.0

Features

- We were forced to implement a new payment provider for Wingman Pro. See our announcements on various channels for more information.

- Audio Library: There is now a new audio_library directory in your config directory and you can put any number of .mp3 and/or .wav files in there. You can also structure them in subdirectories. These files are imported into Wingman AI and you can use them as a new action type in Commands or in Skills. You can now create commands that press hotkeys and then play a sound file - or the other way around. If you select multiple files, a random one will be chosen to play. Sounds familiar, HCS Voice Packs/VA?

- AI Sound effect generation: You don’t have an audio library yet or need a new sound for a very specific use case? Use Elevenlabs to generate mp3 files on the fly using a simple prompt!

- We redesigned Command Configuration. It’s no longer modal but a dedicated view giving it more screen space. We also optimised the UX and made all the options for instant activation and responses much easier to understand. You can now also tell the AI not to say anything after executing a command, which is useful if you just want to play a sound file, for example.

- Wingman Client now has a new Audio Library drawer that lets you see your current sound effects and Elevenlabs subscription details (including characters left)

- Added new LLM providers & models:

- Cerebras: A very(!) fast provider similar to Groq. They offer access to llama-3.1 models and are currently free-to-use. New models will be added automatically to Wingman AI.

- Perplexity: Trusted real-time data powered by llama-3.1. Does not support function calling in Wingman AI but there is a Skill to solve that (see below). It’s a paid service, you will need a funded account with an active API key.

- OpenAI models are now fetched dynamically so that you can use all available models with your own API key

- Config migrations are now sequential, meaning that you will always be able to migrate from any(!) version since 1.4.0 to the new version, even if you skipped some in between. Note that custom (non-official) Skills currently can’t be migrated and Wingmen that are using these, will fail to migrate. Just delete the Skills from your Wingman configs manually, then copy over your custom Skill code to 1.6.0 and run the migration again. Finally re-attach your custom Skills to your now migrated Wingmen in the client.
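
The sequential migration idea above can be sketched as a chain of single-version steps that are applied one after another until the target version is reached. This is a simplified illustration, not the actual migration code: the step functions, the keys they add and the registry layout are placeholders invented for this example.

```python
from typing import Callable, Dict, Tuple

Config = dict
Step = Tuple[str, Callable[[Config], Config]]  # (target version, migration function)


def migrate_140_to_150(cfg: Config) -> Config:
    # Placeholder change, for illustration only.
    return {**cfg, "autosave": True}


def migrate_150_to_160(cfg: Config) -> Config:
    # Placeholder change, for illustration only.
    return {**cfg, "audio_library": []}


# Each source version maps to exactly one next step.
MIGRATIONS: Dict[str, Step] = {
    "1.4.0": ("1.5.0", migrate_140_to_150),
    "1.5.0": ("1.6.0", migrate_150_to_160),
}


def migrate(cfg: Config, from_version: str, to_version: str) -> Config:
    """Apply migration steps in order until the target version is reached."""
    version = from_version
    while version != to_version:
        if version not in MIGRATIONS:
            raise ValueError(f"No migration path from {version} to {to_version}")
        version, step = MIGRATIONS[version]
        cfg = step(cfg)
    return cfg


print(migrate({"name": "Computer"}, "1.4.0", "1.6.0"))
```

Because every step only knows how to reach the next version, a skipped release needs no special case: the chain simply runs through it.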

Skill changes

- FileManager can now read PDF files

New Skills

- Image Generation: Let your Wingman create images on the fly using Dall-E 3 (free & unlimited with Wingman Pro)

- MSFS 2020 Control: Control and retrieve data from Microsoft Flight Simulator 2020 using SimConnect

- ThinkingSound: Use files from the new AudioLibrary to play sounds while the AI is generating responses

- AskPerplexity: Use the Perplexity API to get up-to-date information on a wide range of topics. The Skill allows you to run a model with function calling capabilities (like Wingman Pro/gpt-4o) as main driver for your Wingman while still providing access to recent data from Perplexity.

Bugfixes

- Fixed secret prompts immediately disappearing before you could enter anything. You also no longer have to restart Wingman AI after entering certain secrets for providers or Skills

- Fixed a bug where trying to remove a voice from the VoiceSelection list (used in RadioChatter and VoiceChanger) removed the voice before that

- Better error handling and performance improvements for Elevenlabs

- The “ToC” modal no longer auto-closes before you can click it

- Closing Wingman Client now properly unloads Skills and ends the XVASynth task (instead of accidentally launching it)

- XVASynth: added missing “disabled” label

- Fixed Discord invite links (because our old one expired) & updated some docs

1.5.0

Features

- Autosave: You complained in our survey that the Wingman AI client was unresponsive and sometimes felt sluggish and as your favourite UX-driven development company, we listened. From now on, almost any action and config change you can make happens ad-hoc and no longer requires reloading the config you’re in. This brings a couple of benefits: increased performance as loading a config is expensive, way less conversation history resets, no more “Save” buttons, more real estate in the UI as we don't need the ActionBar anymore and you can't forget to save.

- Config Migrations. Finally. Wingman AI 1.5.0 (and future versions) will now take your configs from previous versions and migrate them to the current format, meaning that you’ll keep almost all of your old secrets, settings, configs and Wingmen. You’ll find a new .migration file in your 1.5.0 config directory after the first start. This file is a log and as long as it’s there, Wingman AI will not attempt to migrate again on start. If you want to re-migrate your 1.4.0 configs for some reason, delete this .migration file (and the 1.5.0 configs), then restart Wingman AI. We only support migrating from 1.4.0 to 1.5.0 but future versions might also be able to migrate step-by-step starting from version 1.4.0. If you are a developer and have built your own skills in older versions, you have to copy the files from /[old_version]/skills/[your-skill] to /[new_version]/skills/[your-skill] manually because we cannot migrate unknown skills reliably. Restart Wingman AI afterwards.

- Our beloved STT provider whispercpp is now bundled (and auto-started) with Wingman AI and is the new default for everything so that everyone can use it. Check out the new section in the Settings view to set it up. If you have an NVIDIA RTX GPU, make sure to check the “use CUDA” option. If you’re using custom models (other than ggml-base.bin), just copy them into the new whispercpp-models directory in your Wingman AI installation directory and the UI will find them.

- Edit default config: In the Settings, you’ll find a new button and view that lets you change the default config (defaults.yaml). This includes the highest level system_prompt that Wingman AI uses. Be careful if you modify this and please only do so if you know what you’re doing. Yes, editing the default config also has autosave.

- Added volume slider to Wingman configuration. If it’s set to zero, TTS processing will be skipped entirely.

- added OpenAI’s much cheaper and faster gpt-4o-mini model and made it the new default. The larger gpt-4o model is still available in case you need it.

- added Google Gemini as LLM provider. Function calling doesn’t work (yet) but you can use it for chit-chat and roleplaying. It’s very fast and pretty good - try it! Unfortunately, we can’t provide it with Wingman Pro, so you’ll need your own API key. The smaller model is currently free.

- Groq and Elevenlabs models are now fetched using their API so that new models will be available in Wingman AI immediately and without an update. Check out Groq’s new llama3.1 models with function calls and Elevenlabs’ brand-new eleven-turbo-2.5 model finally supporting multiple languages.

- We removed the summarize_provider so that tool calls can now be chained, meaning that the response to a function call can call another function and so on. This doesn’t sound like much but is actually huge. You (and skills) can now basically give commands like “Use the XYZ skill and then do ABC with the result”.

- We split settings and configuration for XVASynth and improved its UI support, meaning that it has nice dropdowns for all your downloaded models and voices now. If you want to use XVASynth, install it via Steam, then enable and configure it in the Wingman AI Settings view. After that, it will appear in the list of TTS providers. We cannot migrate your old XVA settings, so please do that once, even if you were already using XVASynth.

- Wingman AI Core can now open directories and files using Windows Explorer or OSX Finder. We added new useful buttons like “open logs” or “open config directory” to the client.

- We added new custom property types "VoiceSelection" and "Slider" so that skills can display our fancy UI components for them. No more writing JSON into text boxes to select voices...

- We removed DirectSound drivers for audio devices because it caused more problems than it solved. The only available driver is MME now. Therefore, we have to reset your audio device settings during the migration, sorry. Make sure you check the “Settings” view after upgrading.
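
The chained tool calls mentioned above boil down to a loop: as long as the model asks for a tool, the result is fed back and the model gets another turn. The sketch below only illustrates that loop and is not the Wingman AI Core implementation; the tools, the message format and the scripted fake_model that stands in for a real LLM are assumptions.

```python
from typing import Callable, Dict, List

# Hypothetical tools; real Skills would expose their own functions.
TOOLS: Dict[str, Callable[[str], str]] = {
    "read_file": lambda arg: f"contents of {arg}",
    "summarize": lambda arg: f"summary({arg})",
}


def fake_model(messages: List[dict]) -> dict:
    """Scripted stand-in for an LLM: requests two tools in a row, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "read_file", "arg": "notes.txt"}
    if len(tool_results) == 1:
        return {"tool": "summarize", "arg": tool_results[0]["content"]}
    return {"content": f"Done: {tool_results[-1]['content']}"}


def run(user_text: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if "tool" not in reply:
            return reply["content"]  # final answer, no more tool calls
        # Feed the tool result back so the next turn can build on it.
        result = TOOLS[reply["tool"]](reply["arg"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("Too many chained tool calls")


print(run("Read notes.txt and summarize it"))
```

The max_steps guard is a design choice of this sketch to keep a misbehaving model from looping forever.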

Bugfixes

- Links in LLM responses now have target="_blank" set so that they’ll open in a new browser window

- Skills are now unloaded correctly when removed. No more infinite zombie timers. We also added some new hooks for developers.

- fixed an issue with our AudioPlayer that sometimes resulted in a crash when using the RADIO_LOW or RADIO_MEDIUM sound effects

- fixed an issue with mic selection while using VA

- fixed an error occurring when using a default audio device

- fixed renamed configs being recreated on startup (again)

- fixed not being able to un-default renamed Wingmen

- fixed Wingmen being disabled when a secret from their configuration was missing on startup

- fixed Elevenlabs get_available_voices API endpoint changing for no apparent reason and without warning us ;) Thanks again to @lugia19 who fixed this very quickly in his great elevenlabslib

- “Request a feature” now opens in a new browser window because integrating it could cause a “blank screen” error.

- If Wingman AI Core fails to start, we now show better troubleshooting information in the client, pointing to our Discord #support channel.

New Skills

- APIRequest: This one is a powerhouse. Point it to a docs page or (OpenAPI) spec for an API of your choice and it will understand the endpoints and then be able to call them - all on-the-fly! We already gave it the Wingman AI Core API spec and well... it was able to “send text” to another Wingman immediately without us ever implementing that. Wingmanception! You can also feed it any public online API like Pokedex or whatever. Try it out!

- RadioChatter plays customizable random AI-generated chatter over time. You can even respond to these messages if you want to. It also uses our new custom property types now (in case you were already using the Discord versions).

- Auto-Screenshot: Takes a screenshot if you request it or in moments where your voice input suggests interesting, scary or funny moments. Example: “Aww look, a spaceship!” => takes a screenshot

- ATSTelemetry: Retrieve game state information from American Truck Simulator or Euro Truck Simulator 2

- NMSAssistant: fetch information about No Man's Sky items, elements, crafting, cooking, expeditions, community missions, game news, and patch notes. Powered by NMS Assistant API.

Skill changes

- ControlWindows and FileManager got some new capabilities like new supported file formats, text-to-clipboard and appending text to existing files

- Timer skill can now loop and works more efficiently

- VoiceChanger now makes use of the new custom property types and offers a nice voice selection in the UI

- Vision now outputs the direct LLM responses in debug_mode

new

1.4.0

Skills:

- added Timer: Let your Wingman delay and time command executions or responses

- added VoiceChanger: Let your Wingman change its voice automatically, in a customisable way

- added WebSearch: Searches the web using the DuckDuckGo search engine. You can also specify a specific page/URL that it will crawl.

- added TypingAssistant: Let your Wingman type text for you (in any app etc.)

- added QuickCommands: Learn command phrases you use regularly and auto-convert them to instant activation commands to execute them faster

- added Vision: Use AI to take and analyse a live screenshot, then ask questions about it

- added FileHandler: Read and create files and directories

- UEXCorp now supports even more UEX API v2 features and no longer requires an API key

- ControlWindows can now use your clipboard and supports window snapping

Features & Changes

- added Clippy Wingman - the famous assistant we all know and hate, now resurrected with AI powers and using a lot of our new skills. He resides in a new config named “General”.

- added AI avatar generation for your Wingman using DALL-E

- improved the quality of all of our audio effects a lot

- “ROBOT” sound effect is now called “AI”

- “RADIO” sound effect now has 3 different variants to simulate a low/medium/high quality radio device

- new Apollo Beep sound

- Your Wingman can now say instant filler responses (like “Give me a second to…”) for long-running tasks

- The Wingman AI Client can now render Markdown responses with links, images, different text formats etc. (like SlickGPT)

- you can now define multiple commands with the same instant activation phrase and they’ll all be executed (with a better response) if you say the phrase

- Wingman AI client no longer saves your entire Wingman configuration but only the differences to the defaults.yaml config. This is a huge change and will help us with future config migrations and allow us to finally make the defaults editable in the UI in a later version.
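
The "only save the differences to the defaults" approach can be sketched roughly like this. This is an illustration using plain dictionaries, not the client's real code: the function names and the config keys (`name`, `sound`, `effect`, `volume`) are made up, and the sketch ignores keys that were deleted from the defaults.

```python
from typing import Any


def diff_against_defaults(config: dict[str, Any], defaults: dict[str, Any]) -> dict[str, Any]:
    """Return only the entries of config that differ from defaults."""
    diff: dict[str, Any] = {}
    for key, value in config.items():
        if key not in defaults:
            diff[key] = value
        elif isinstance(value, dict) and isinstance(defaults[key], dict):
            # Recurse so that unchanged nested values are not stored.
            nested = diff_against_defaults(value, defaults[key])
            if nested:
                diff[key] = nested
        elif value != defaults[key]:
            diff[key] = value
    return diff


def apply_diff(defaults: dict[str, Any], diff: dict[str, Any]) -> dict[str, Any]:
    """Rebuild the full config by layering the saved diff over the defaults."""
    merged = dict(defaults)
    for key, value in diff.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_diff(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical example values, not taken from the real defaults.yaml.
defaults = {"name": "Wingman", "sound": {"effect": "AI", "volume": 1.0}}
config = {"name": "Clippy", "sound": {"effect": "AI", "volume": 0.8}}

saved = diff_against_defaults(config, defaults)
restored = apply_diff(defaults, saved)
```

Because only the changed values are stored, a later change to a default is picked up automatically by every Wingman that never overrode it.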

Bugfixes

- fixed renamed or deleted default Configs/Wingmen being recreated on restart

- fixed a bug enabling Voice Activation even if it was disabled in the Settings if you (accidentally) pressed the un/mute key (default: Shift+x)

- fixed a bug that prevented dependencies defined by Skills from being loaded properly

- fixed drag&drop to reorder action “rows” in the Commands tab

- fixed silent error/crash on start when no mic is connected

- added missing Azure STT language selection for Voice Activation (or PTT with external clients) in Settings

- fixed creating new configs from templates ("Empty" vs. "Star Citizen")

- fixed conversation not being cleared if the "clear button" was clicked in "View all" terminal mode

- fixed mouse button actions not being displayed in the Command list

improved

fixed

1.3.1

- added the updated UEXCorp skill that now works with the new UEX API v2.0

- Free users can now fully explore (but not save) all skills in the client

- StarHead skill is now attached to the Computer Wingman by default so that Free users can use it

- improved default contexts of our demo Wingmen

- fixed a bug with un/muting that led to Voice Activation being enabled when it shouldn't

- fixed an issue when handling Alt Gr in command bindings

- fixed renamed default Wingmen being recreated on restart

- fixed a bug caused by Wingmen without configured activation keys

- fixed a bug with saving the Basic configuration

- gpt-4o for Wingman Pro/Ultra is now served via Azure (instead of OpenAI)

new

improved

fixed

1.3.0

- Added gpt-4o model. The latest & greatest from OpenAI ticks all the boxes for Wingman AI, so we are making it the new default model for... everything. We've even removed the gpt-3.5-turbo and gpt-4-turbo options because we're sure we won't need them anymore. General responses should already be significantly faster, and it will get much faster once OpenAI unlocks audio input/output to the API. The model also seems to work well with our new contexts and capabilities. Please let us know (with examples) if we are wrong!

- StarHead is now a Skill, meaning you can attach it to any Wingman.

- Added new Control Windows Skill to launch applications, minimise/maximise windows etc. For example, tell it to “Open Spotify”. Note that the skill currently only looks for applications in your Start Menu directory (%APPDATA%/Roaming/Microsoft/Windows/Start Menu/Programs), so if it tells you that it cannot find an application, create a shortcut in that directory.

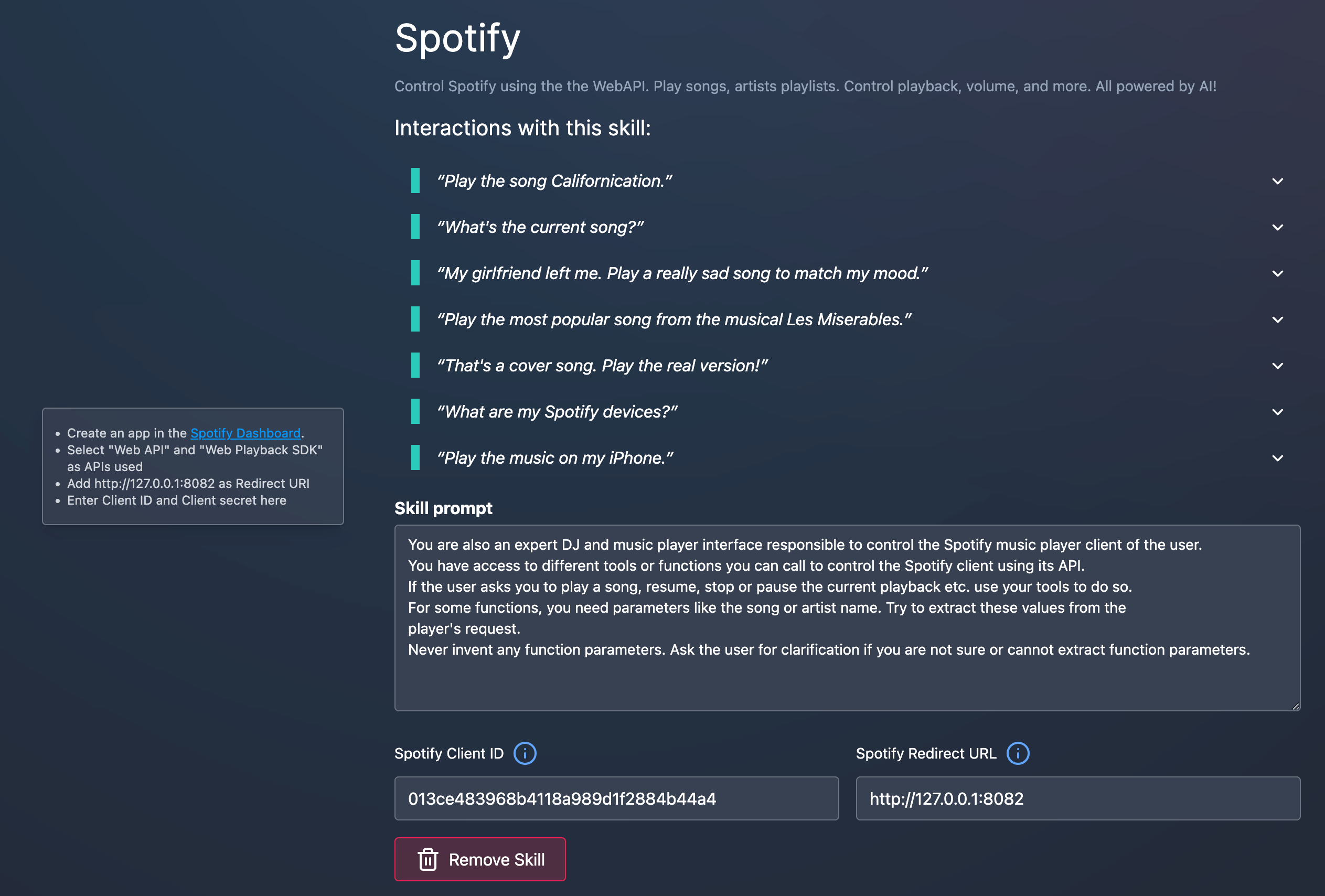

- Added new Spotify Skill to play songs or playlists, “like” songs, control the playback device and more. The skill is very intelligent and honestly takes Spotify to another level because you can ask it things like: “Play the theme song from Bad Boys”. Try it out, it’s really good! You will need to create a developer account for your Spotify Premium account and then connect Wingman to it. The Spotify client has to be running on one of your devices and must be playing a song. Otherwise, the Spotify Web API won't find any active device for your account. You can find more information and instructions on our Discord server.

- Added Groq and OpenRouter (in favour of the old "Llama") as AI providers. You can select any of their models from a list in the UI, but only Mistral and Llama3 have been tested with AI function calls. However, you can use other models for roleplaying etc. and experiment with them. Our demo contexts may need to be tweaked to work properly with other models.

- Added "Local LLM" as an AI provider. This makes it easier for you to connect to different models from different providers. Use Local LLM instead of the old OpenAI > Advanced > base_path setting to connect to Ollama, LMStudio etc. You can now enter your own custom model name and are no longer limited to the dropdown choices in the client.

- Updated default commands for Star Citizen 3.23. There have been a lot of changes in the last SC patch and our commands are by no means a complete mapping for everything. Feel free to improve these bindings and post your updates in Discord. We can collect your changes and then release them for everyone to use.

- Added a handler and websocket allowing you to connect esp32 devices to Wingman AI. This is what we used in our Walkie Talkie for Wingman AI short video. We’ll publish a blog post about this but until then, contact @Timo if you’re interested in connecting external devices to Wingman AI using OpenInterpreter etc.

- Added Debug Mode toggle to global settings. Enable this for more verbose system messages and additional benchmarks.

- @JayMatthew’s beloved UEXCorp Wingman has already been converted to be a skill, too. Unfortunately, the UEXCorp API was changed completely yesterday, so he will fix the skill as soon as possible and you can then install it (without another Wingman AI update) when it’s done. You can even use it together with StarHead now! You may need to give special commands to determine which skill you want to prefer when asking for a good trading route. We are experimenting with this, but we need more data and experience from you, so please let us know how it goes.

- fixed crashes when using AI function calling with llama3 models

SlickGPT Pro (For Wingman Ultra users):

- added gpt-4o model for both SlickGPT Free and Pro users

- Free users can now use llama3 (for free via Groq) and mistral-large with their own API keys. We are waiting for a fix in Azure to serve these two options in our SlickGPT Pro backend.

- various UI improvements on desktop & mobile viewports

new

1.2.1

- We updated the StarHead Wingman to support better trading data and added a new function to get detailed ship data. You can ask for ship details or even compare different ships or ship systems. Try something like "What is the difference between a Constellation Taurus and a Constellation Andromeda", for example.

- We worked around the super slow performance of GPT-4-Turbo, so you can now use it again. It's still slower than GPT-3.5-Turbo.

- Removed emotes from audio playback if they are in the text result. Sometimes the AI wants to express emotions like this, but it was very annoying in the audio output.
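
Stripping emotes before the text goes to text-to-speech could look roughly like the sketch below. It assumes that emotes show up as short asterisk-wrapped phrases such as `*sighs*`; the changelog does not say which format Wingman AI actually filters, and the `strip_emotes` name is hypothetical.

```python
import re

# Assumption: an emote is a short phrase wrapped in single asterisks, e.g. *sighs*.
EMOTE_PATTERN = re.compile(r"\*[^*\n]+\*")


def strip_emotes(text: str) -> str:
    """Remove asterisk-wrapped emotes and tidy up the leftover whitespace."""
    without_emotes = EMOTE_PATTERN.sub("", text)
    # Collapse the double spaces that removed emotes leave behind.
    return re.sub(r"\s{2,}", " ", without_emotes).strip()


spoken_text = strip_emotes("*sighs* Fine, I will do it. *rolls eyes*")
```

Only the audio path would use the cleaned text, so the written response can still show the emotes. A pattern this simple also touches Markdown emphasis, so a real implementation would need to be more careful.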
